Spotlight on Explainable AI

March 13, 2023

The financial services industry has seen a significant increase in the adoption of artificial intelligence (AI) in recent years, and this is just the beginning. A 2022 Economist Intelligence Unit survey of IT executives in banking found that 85% had a “clear strategy” for adopting AI in the development of new products and services. Top areas for growth over the next one to three years include personalising investment, credit scoring and portfolio optimisation.*

AI can deliver significant benefits by boosting efficiency, cutting repetitive processes, increasing accuracy, and reducing human error. When it comes to interpreting the ever-growing volume of data, AI can also add real value to decision-making. But whilst AI can bring huge benefits, it is not without controversy. Concerns around bias, accountability, and explainability need to be addressed, especially in the financial industry, where AI-driven decisions can have significant consequences.

The lack of transparency and the prevalence of black-box models have been key challenges to AI adoption across the industry. This is why explainable AI (XAI) approaches are essential: they allow users to understand the rationale behind an analysis, so they can make informed decisions rather than relying blindly on machine learning models.

What is XAI?

XAI refers to a set of techniques and methods that enable the development of AI systems that can be easily understood and interpreted by humans. The goal of XAI is to build AI models that can explain their decisions, actions, and reasoning processes in a way that is transparent, trustworthy, and easy to understand for users, stakeholders, and regulatory authorities.

The need for XAI arises from the fact that many AI algorithms are complex and opaque, making it difficult for humans to understand how they arrive at their conclusions. XAI seeks to address this problem by designing AI models that can provide explanations or justifications for their decisions, such as highlighting which features of the data were most important in making a prediction. In addition, XAI can help mitigate issues related to bias, discrimination, and fairness in AI by enabling users to identify and correct any biases or errors in the training data or the model itself.
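To make "highlighting which features of the data were most important" concrete, here is a minimal sketch of one common attribution technique, assuming a simple logistic-regression model of downgrade probability. For a linear model, each feature's contribution to the log-odds relative to a baseline input is its weight multiplied by its deviation from that baseline, and the contributions add up exactly to the change in the model's output. The feature names, weights, baseline and issuer values are all hypothetical, and this is not a description of any particular product's method. More complex models usually need approximation methods such as SHAP.

```python
import math

# Hypothetical logistic model of downgrade probability.
# Feature names, weights and intercept are made up for illustration.
WEIGHTS = {"fx_change": 1.2, "equity_return": -0.8, "debt_to_equity": 0.5}
INTERCEPT = -2.0


def logit(x):
    """Log-odds of a downgrade under the hypothetical linear model."""
    return INTERCEPT + sum(WEIGHTS[k] * x[k] for k in WEIGHTS)


def probability(x):
    """Convert log-odds to a probability with the logistic function."""
    return 1.0 / (1.0 + math.exp(-logit(x)))


def contributions(x, baseline):
    """Per-feature contribution to the log-odds, relative to a baseline input.

    Because the model is linear in the log-odds, these values sum exactly
    to logit(x) - logit(baseline).
    """
    return {k: WEIGHTS[k] * (x[k] - baseline[k]) for k in WEIGHTS}


# Hypothetical "typical issuer" baseline and one issuer to explain.
baseline = {"fx_change": 0.0, "equity_return": 0.0, "debt_to_equity": 1.0}
issuer = {"fx_change": 0.5, "equity_return": 0.3, "debt_to_equity": 1.2}

contrib = contributions(issuer, baseline)

# Rank features by the size of their effect, most influential first.
for name, value in sorted(contrib.items(), key=lambda kv: -abs(kv[1])):
    direction = "raises" if value > 0 else "lowers"
    print(f"{name}: {value:+.2f} ({direction} downgrade log-odds)")

print(f"baseline p={probability(baseline):.3f}, issuer p={probability(issuer):.3f}")
```

Positive values push the downgrade probability up and negative values pull it down, which mirrors the "contributing to and detracting from" view that feature-impact displays typically present.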

Scorable: XAI in Action

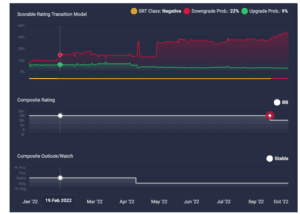

Scorable, bondIT’s Credit Analytics platform, uses XAI to predict the probability of a rating downgrade or upgrade over a 12-month horizon for 3,000 corporate and financial issuers worldwide. As the example below shows, Scorable predicted the rating downgrade of a US corporate issuer several months ahead of time. In mid-February, Scorable’s Rating Transition (SRT) model detected an increase in credit risk, with the issuer’s downgrade probability rising above 20 percent nearly two months before its outlook was changed to negative and seven months before the rating downgrade occurred.

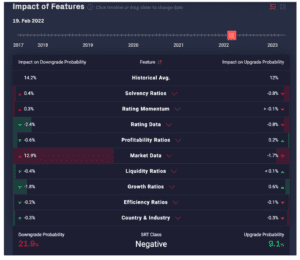

Scorable’s XAI approach supports transparency and allows users to understand the drivers behind its risk assessments. In the “Impact of Features” section, users can see which variables are contributing to and detracting from upgrade and downgrade probabilities, and to what degree. In the case of this issuer, the key drivers were Market Data, including exchange rates and equity prices, followed to a lesser extent by Solvency Ratios.

Want to find out more about the benefits Scorable offers to investors? Contact our team for more info.

* Economist Intelligence Unit 2022: “Banking on a game-changer: AI in financial services”